Chatbots and AI Agents

Our specialist AI understands your customers and guides them to resolve enquiries in-channel

- Diagnose, triage & book repairs & complaints

- Ethical AI that's secure and auditable

- Available as an API, Chatbot or MS Copilot

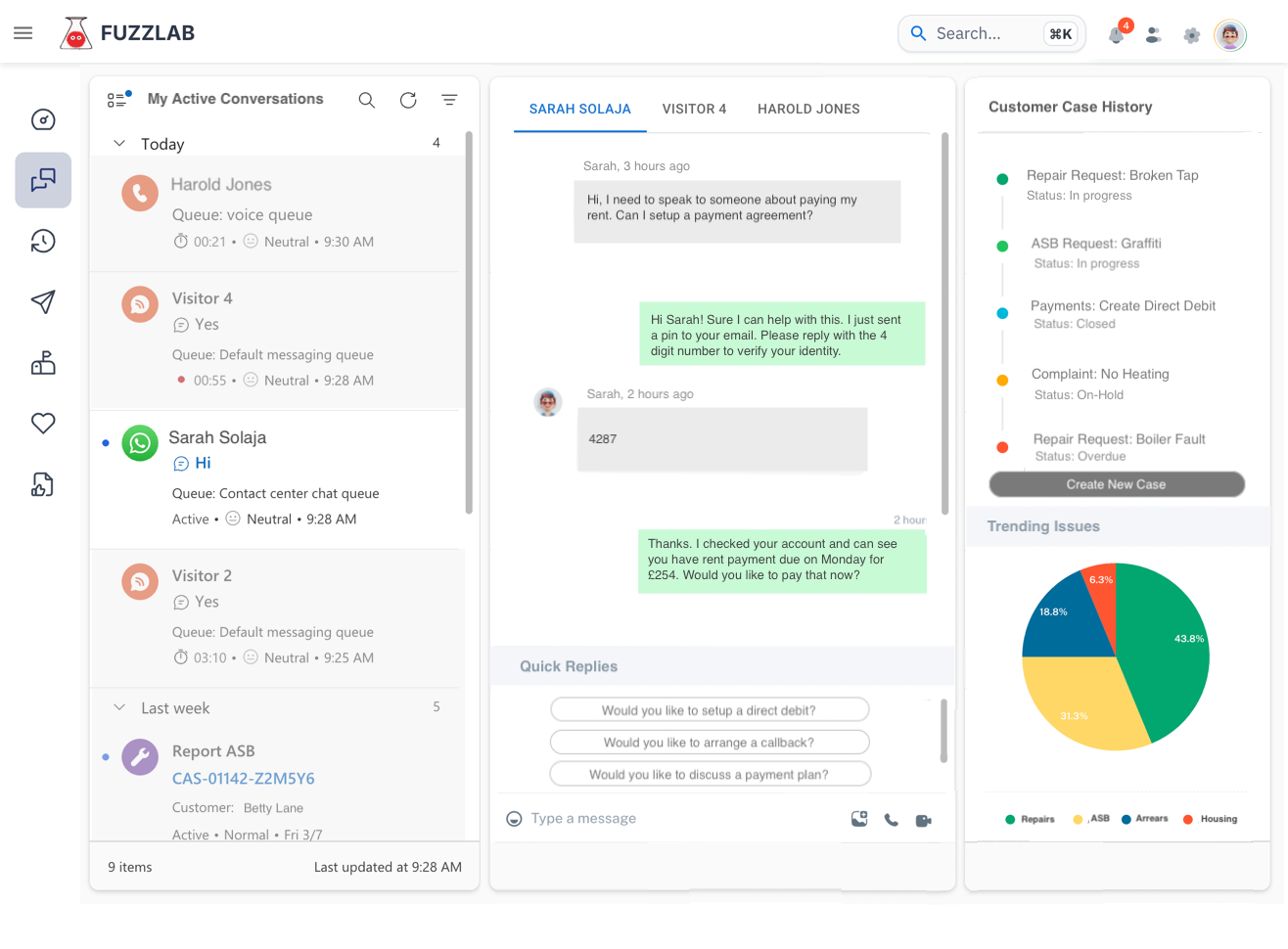

AI Agents & Messaging

Improve Engagement & Increase Satisfaction

Don't leave customers feeling abandoned and on hold. Connect with them on the channels they use every day.

Fuzzlab’s AI-powered digital agents and event-driven messaging put you in control, delivering guided customer experiences that save time, drive clarity and build trust.

Make every interaction count

Listen at scale & respond instantly in any language, with AI that understands your customers, your policies & your services

Insight into every interaction

- Customers

- CSAT

- Cost savings

Simplify operational processes & spend more time with the customers who need you most

Reach out and interact with customers on the channels they trust and use every day

We started Fuzzlab to help social housing providers diagnose repairs more accurately using machine learning.

With the help of visionary social housing leaders, our MVP quickly evolved into a set of customer service agents.

Today, we offer a complete platform, combining AI automation, asynchronous digital messaging, and a unified contact centre that enables better customer outcomes, reduces pressure on staff, and keeps customers engaged.

Discover how our automation tools and unified contact centre can transform your customer service.

Fuzzlab can be deployed with or without a CRM. If you have one, we can integrate. If you don’t, we can still help you automate and unify your communication channels.

Fuzzlab supports WhatsApp, SMS, voice, email and web chat out of the box, so residents can contact you however works best for them.

Fuzzlab follows ISO 27001 best practices, offers UK-hosted options, and supports GDPR-compliant data handling throughout.

You can launch your first AI assistant and digital channels, like WhatsApp, within days. A full contact centre takes a little longer, with implementation starting at 6 weeks.

The name Fuzzlab comes from one of our founding objectives: to give customers a warm, fuzzy feeling. It’s a symbol of great customer service and reflects our mission to make public services less intimidating and more accessible.